November 14, 2025

Mastering Apple Intelligence in macOS Tahoe

AI has been evolving at an extraordinary pace. Microsoft and others have already made it a core part of their systems, while Apple seems to have taken a quieter, more conservative route. For years, its progress in this area went mostly unnoticed, giving the impression that macOS was falling behind.

With macOS Tahoe, however, Apple has finally taken a clearer step toward integrating AI into everyday use.

In this article, I’ll take a close look at how Apple Intelligence works across macOS and see what’s genuinely useful, what still feels experimental, and whether it can make your daily tasks any easier.

What is Apple Intelligence in macOS Tahoe

Apple Intelligence is Apple’s new suite of built-in AI features that bring generative tools, language understanding, and smart actions directly into macOS. In practical terms, it lets your Mac analyze and summarize text, translate content, generate ideas, and even perform context-aware tasks inside apps. One of its central components is the native integration of ChatGPT, which can be used right from within system tools and apps without switching between windows.

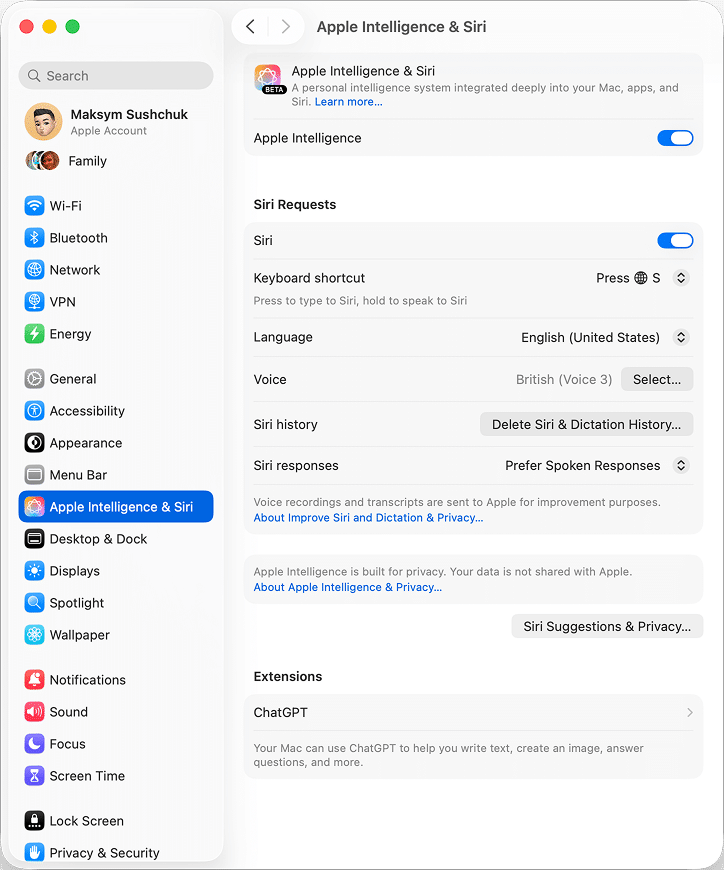

Apple positions Apple Intelligence as a privacy-first system. Your data is processed on-device whenever possible, and nothing is sent to the cloud without your explicit permission. However, the available features depend on your Mac model, region, and system language. The full experience is currently limited to Macs with Apple silicon whose system language is set to English (United States).
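If you'd rather check those two requirements from Terminal, here is a minimal Python sketch. It only tests what this article names (an Apple silicon Mac and English (United States) as the primary language); it is not Apple's own eligibility check, and the function names are made up for illustration. It assumes the `defaults read -g AppleLanguages` command prints the preferred-language list in its usual parenthesized format.

```python
import platform
import subprocess


def parse_defaults_array(text: str) -> list[str]:
    """Turn the parenthesized list printed by `defaults read` into a Python list."""
    items = []
    for line in text.splitlines():
        item = line.strip().strip("(),").strip().strip('"')
        if item:
            items.append(item)
    return items


def looks_eligible(system: str, machine: str, languages: list[str],
                   required_language: str = "en-US") -> bool:
    """Rough pre-check based only on the two requirements named in this article."""
    # Note: Python running under Rosetta reports "x86_64" even on Apple silicon.
    is_apple_silicon_mac = system == "Darwin" and machine == "arm64"
    # The first entry of the preferred-language list is the primary language.
    primary_matches = bool(languages) and languages[0] == required_language
    return is_apple_silicon_mac and primary_matches


def read_preferred_languages() -> list[str]:
    """Read the preferred-language list on macOS; return [] if that fails."""
    try:
        result = subprocess.run(
            ["defaults", "read", "-g", "AppleLanguages"],
            capture_output=True, text=True, check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_defaults_array(result.stdout)


if __name__ == "__main__":
    ok = looks_eligible(platform.system(), platform.machine(),
                        read_preferred_languages())
    print("Looks eligible" if ok else "Probably limited or unavailable")
```

Treat a negative result as a hint rather than a verdict: region settings and future macOS updates can change what is available.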

How to enable Apple Intelligence in macOS

Note:

Apple Intelligence is not yet available on all Macs or in all languages and regions. To access the latest features, install the newest macOS Tahoe update.

To enable Apple Intelligence, go to System Settings → Apple Intelligence & Siri → Turn on Apple Intelligence.

In this window, you can also connect ChatGPT on macOS. To do so:

- Scroll down and locate the Extensions section.

- Click the arrow next to ChatGPT.

- Choose how you want to use ChatGPT – either by connecting your account or using it anonymously.

For full integration and access to advanced responses, you’ll need a paid ChatGPT subscription. If you have one, connect your account to enable all ChatGPT features in macOS.

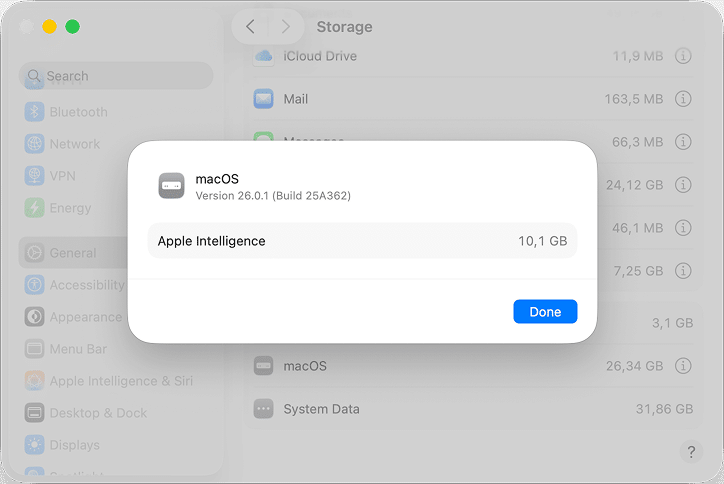

Does Apple Intelligence take a lot of space?

Apple Intelligence isn’t lightweight. Apple officially mentions around 7 GB as the baseline, but real users often report higher numbers.

To check how much space Apple Intelligence occupies, open System Settings → General → Storage, then click the “i” icon next to macOS. Here you’ll see a separate entry for Apple Intelligence and related components.

On my Mac mini with an M4 Pro chip, it takes up 10 GB.

On Apple’s discussion forums, people report up to 13 GB being used, even when Apple Intelligence is turned off. My guess is that the difference depends on your hardware and how much cached data the system keeps.

If your storage is running low but you still want to try Apple Intelligence, consider cleaning up unnecessary files before enabling it. MacCleaner Pro by Nektony can help you safely reclaim several gigabytes by removing system junk, caches, and other clutter that quietly accumulates over time. Freeing up space first ensures the AI features install smoothly and your Mac stays fast and stable.
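To get a quick read on whether you have enough room before enabling Apple Intelligence, a few lines of Python are enough. This is only a sketch: the 13 GB figure is the upper end of the user reports mentioned above, the 5 GB safety margin is my own assumption, and `has_room` is a hypothetical helper name. The free-space number comes from Python's standard `shutil.disk_usage`, which does not count purgeable space, so it may be lower than what System Settings shows.

```python
import shutil

GB = 1000 ** 3  # macOS reports storage in decimal gigabytes


def has_room(free_bytes: int, needed_gb: float = 13.0, margin_gb: float = 5.0) -> bool:
    """Return True if free space covers the expected footprint plus a margin."""
    return free_bytes >= (needed_gb + margin_gb) * GB


if __name__ == "__main__":
    free = shutil.disk_usage("/").free
    print(f"Free space: {free / GB:.1f} GB")
    print("Enough room" if has_room(free) else "Consider freeing up space first")
```

If you want to test against Apple's stated 7 GB baseline instead, call `has_room(free, needed_gb=7)`.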

Top ways to use Apple Intelligence on Mac

Apple Intelligence in macOS Tahoe spreads across the entire system and does its best to add flexibility and responsiveness to the tools we’re already familiar with. But does Apple succeed at making AI actually helpful in your everyday tasks?

Let’s explore and find out!

Translation and faster replies in Messages

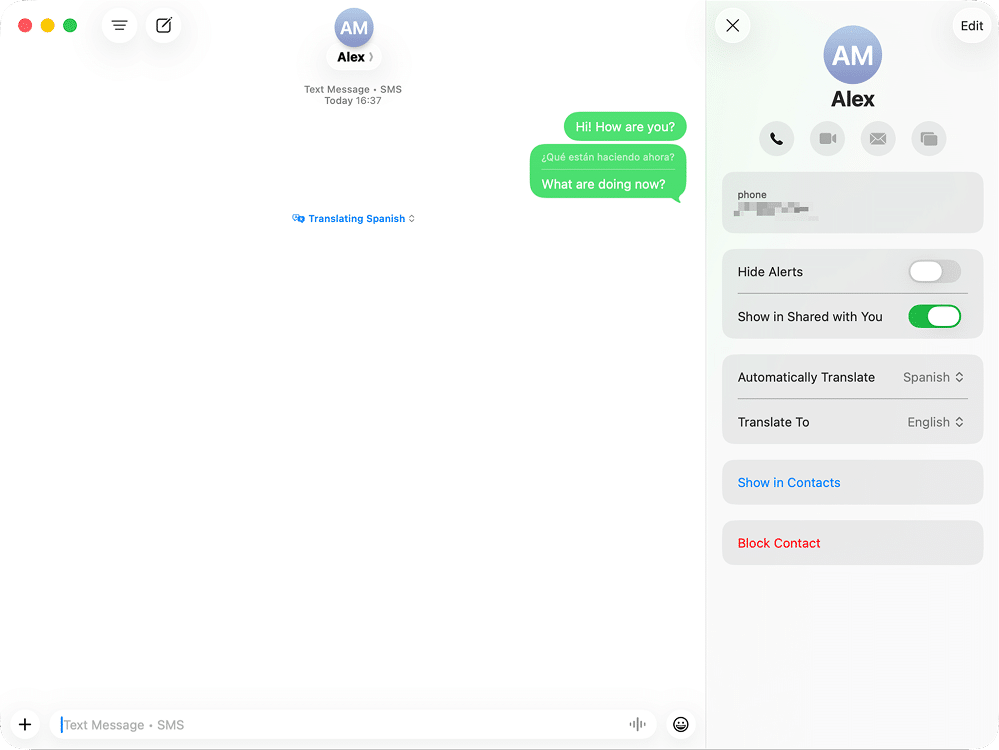

Apple Intelligence brings live translation and smart reply suggestions to Messages.

The Live Translation feature automatically converts your text while you type or read messages in another language.

To enable it:

- Open the Messages app.

- Click the contact’s name at the top of the chat.

- Open their card.

- Switch on Automatically Translate.

- Select the source and target languages.

Messages also filters spam and suggests quick, context-aware responses, but I haven’t had a chance to see either in action yet.

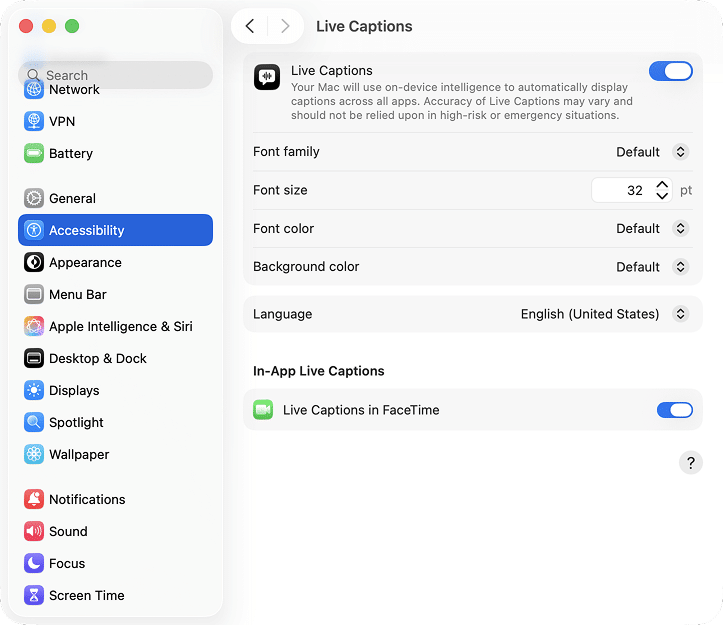

Real-time captions in FaceTime and Phone

FaceTime and the Phone app now include Live Captions that can also translate spoken text in real time. During calls, you can read the translation right on screen or listen to it as audio.

You can enable or disable Live Captions in System Settings → Accessibility.

The feature is still a bit unstable and sometimes fails to display correctly, but its intent is clear. It makes calls and media more accessible, especially for people who rely on captions or translation for better understanding.

For me, though, live captions in FaceTime and phone calls are a rare need. Most of my conversations happen in English or my native language, and when I talk with international contacts, I usually use Google Meet or Zoom.

Still, it’s a nice convenience for those who often call other Apple users across languages.

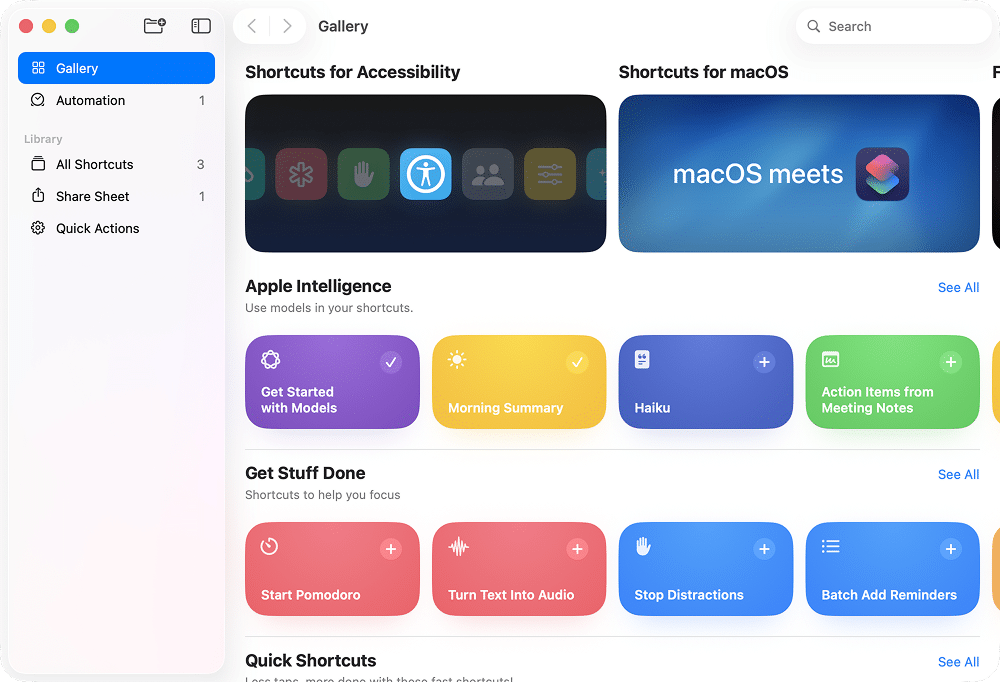

AI Actions in Shortcuts

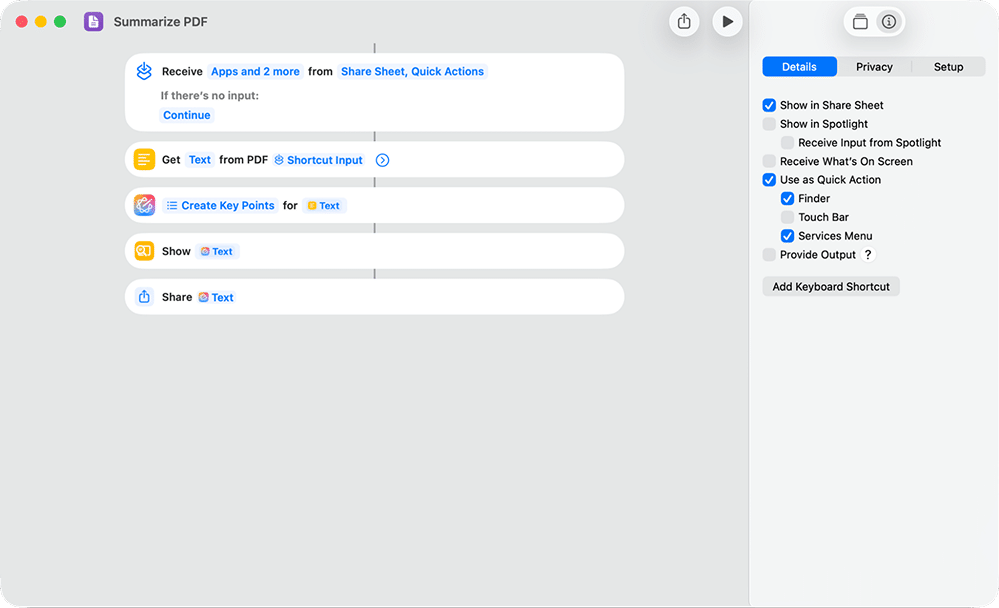

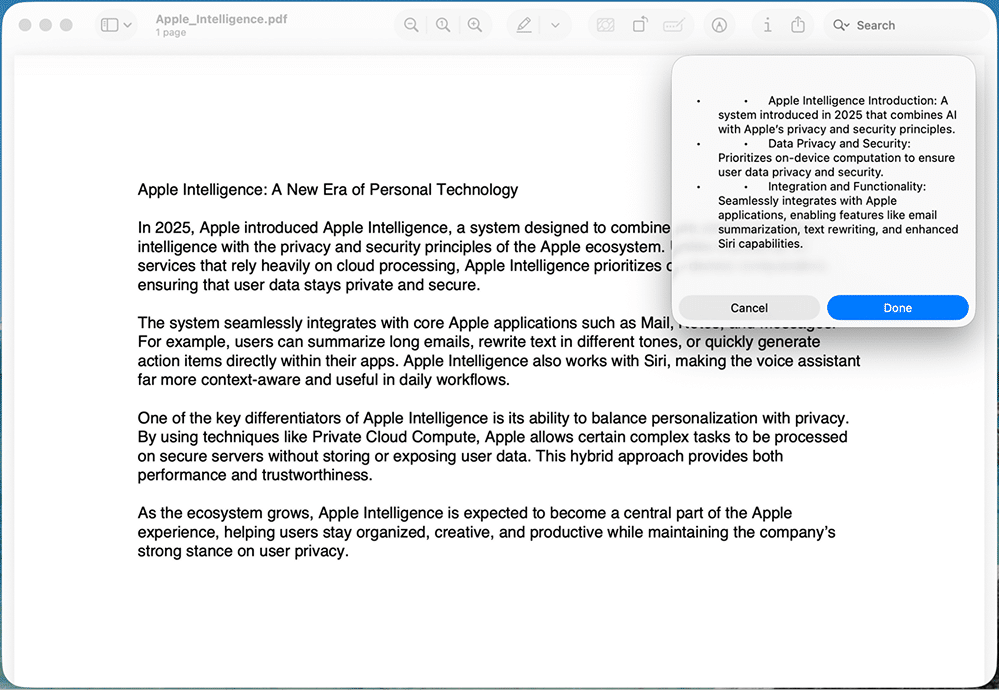

The Shortcuts app has become significantly smarter with Apple Intelligence. You can now build automations that include actions such as text summarization, image generation, or even invoking local AI models for specific tasks.

A great example is Summarize PDF, which lets you preview a document’s key points without opening it.

Add it to Quick Actions, and you’ll see a two-sentence summary with just a couple of clicks.

You can also connect AI to triggers such as plugging in an external monitor or saving a file. For someone like me who automates workflows daily, this is a welcome improvement.

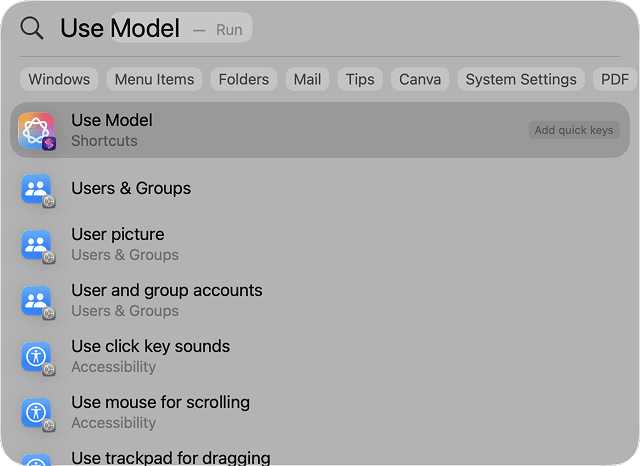

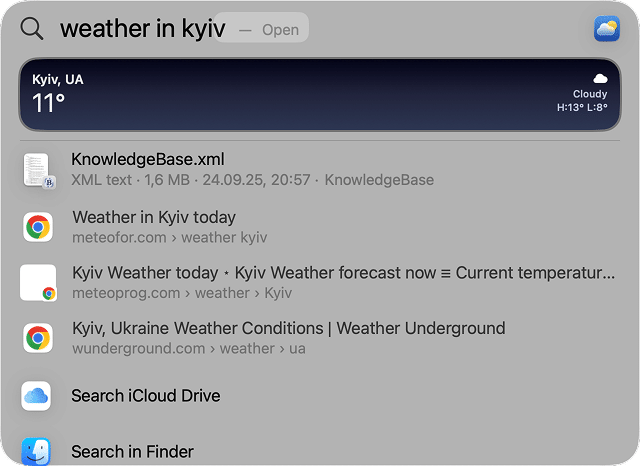

Smarter search in Spotlight

Spotlight in macOS Tahoe has evolved from a basic search tool into something closer to an assistant. It now offers smarter result ranking, contextual actions, and AI-based queries.

You can also query weather, calendar events, or definitions right from the search bar.

Although Spotlight is more useful now, I still find Raycast quicker and more powerful. But if you prefer built-in macOS tools, this version finally feels responsive enough to replace simple search utilities.

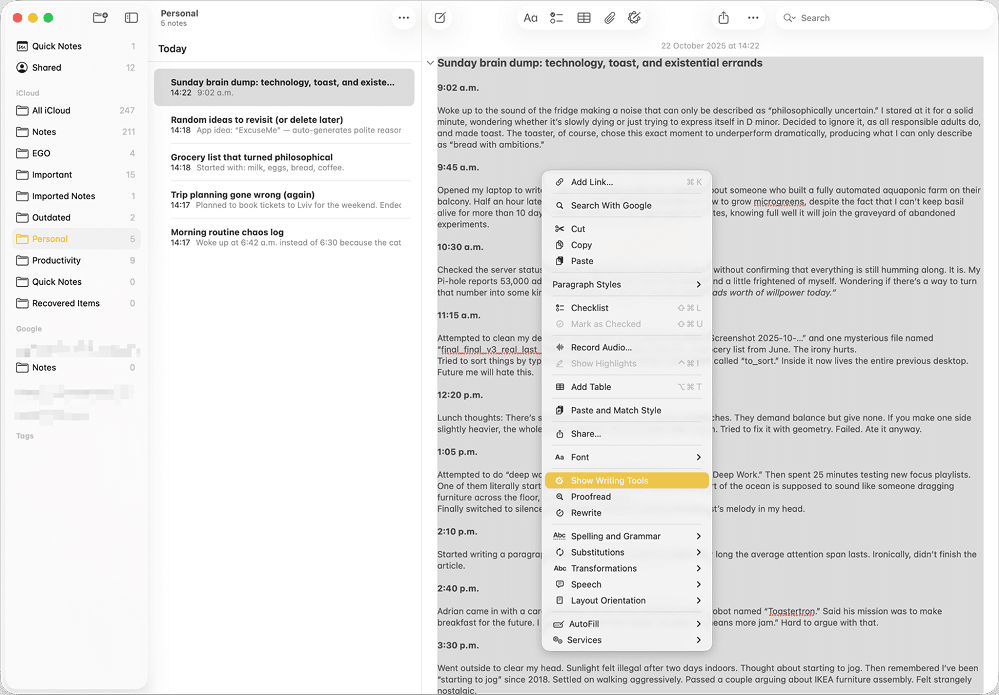

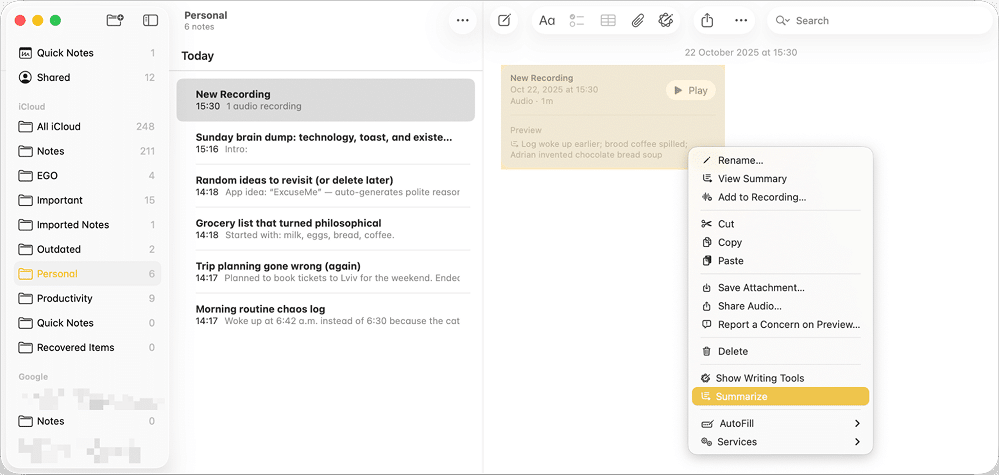

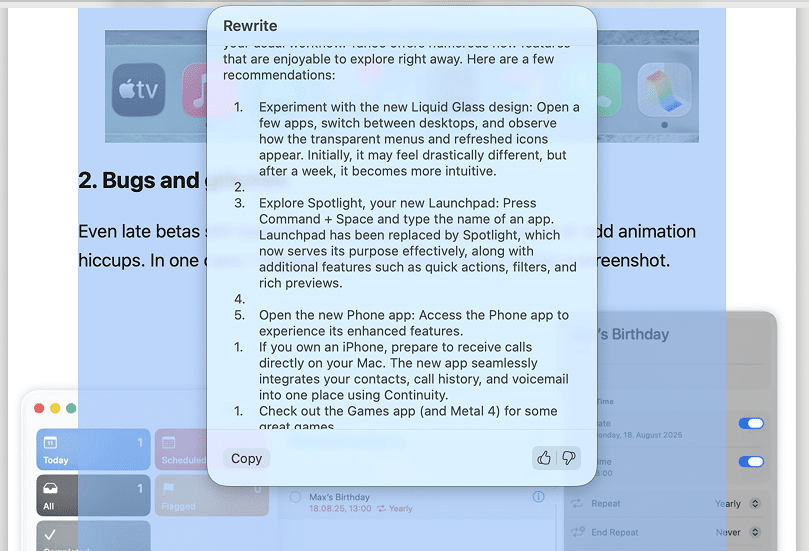

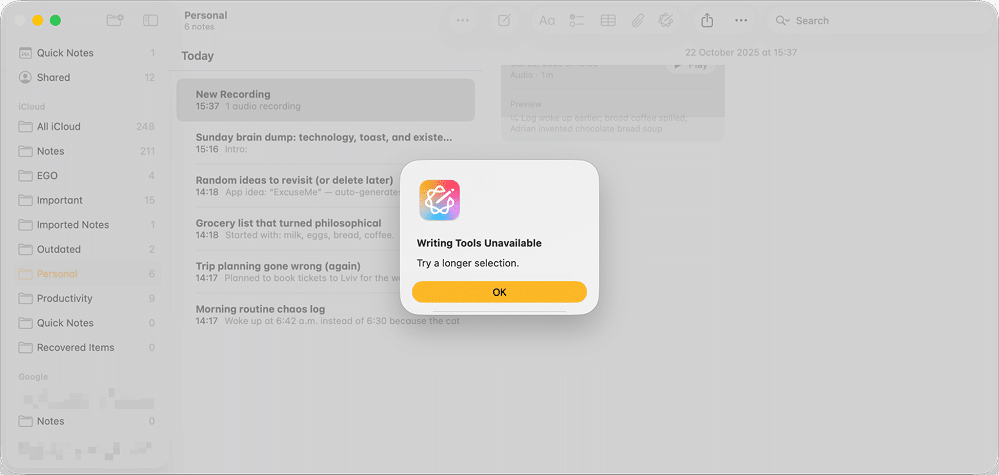

Writing Tools

Writing Tools appear throughout macOS in apps where you can edit text. They can rephrase, summarize, or change tone within Mail, Notes, or Pages and are available via the context menu (the one that appears when you right-click the text). You can make your message sound more formal, concise, or friendly in seconds.

To make use of Writing Tools:

- Open, for example, Pages, Notes, or TextEdit.

- Select the text you want to process and right-click on it.

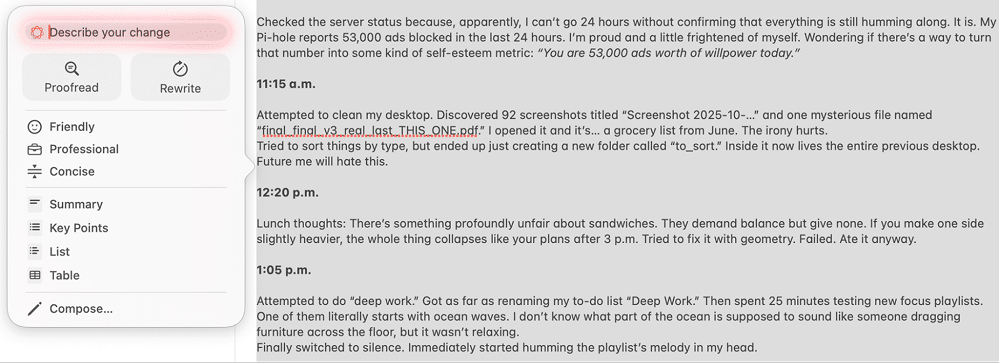

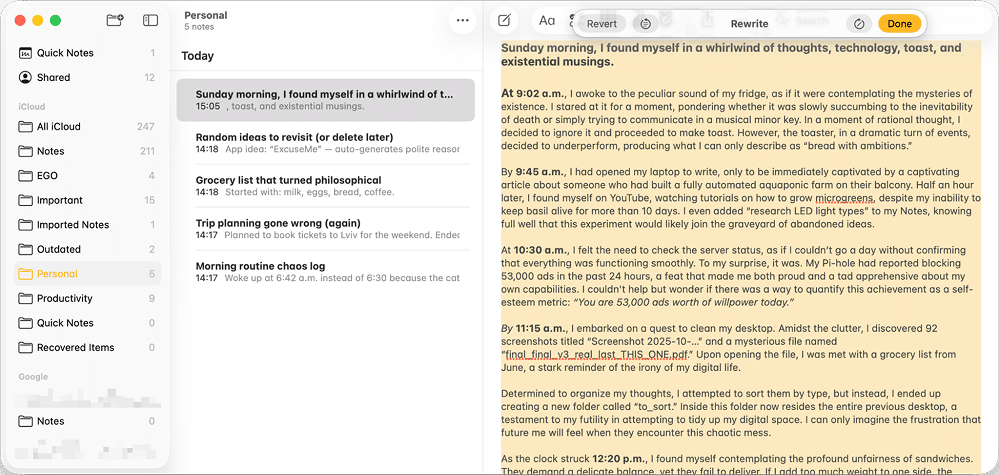

- In the menu, select Show Writing Tools. This will open an extra menu with writing tools.

- Apple expects that the most used writing tools are proofreading and rewriting, so in the Writing Tools menu, the Proofread and Rewrite buttons are the biggest. (You can also see those options in the right-click menu of a selected text next to Show Writing Tools.)

- Alternatively, type in the change you want to make, or select an option to make the text more Friendly, Professional, or Concise. When you use these options, the original text is replaced and a small panel appears above it. With it, you can revert the changes, view the original, or apply the same effect again.

- If you’re happy with the outcome, click Done.

- With the Summary or Key Points options, you can review the suggested summary or list, then replace the original text with it or copy it to the clipboard.

- At the bottom of the Writing Tools menu, you’ll also find the Compose option. Here, ChatGPT can make any change you describe, and you control the context it has at hand. For instance, you can give it your writing guidelines or previous notes so that it picks up your writing style and adds extra information to your text.

In my opinion, it’s a clever idea, though the execution is still uneven. When I tested it, summarization sometimes misformatted text, created faulty lists, or left awkward spacing.

It looks like it works best for short pieces that need polishing but struggles with longer documents.

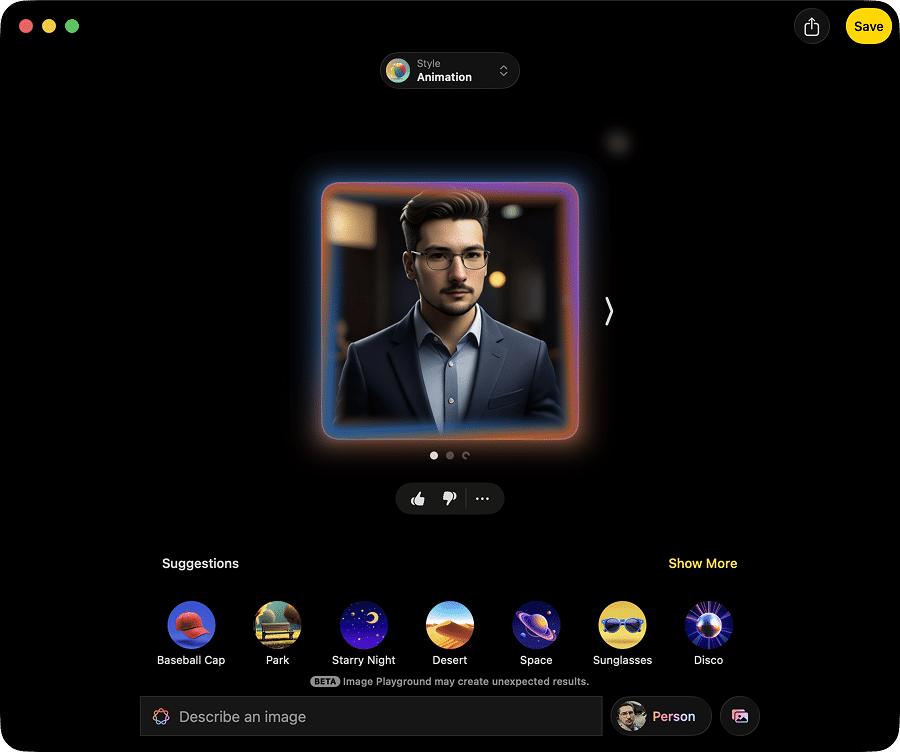

Visuals generation via Image Playground

Apple’s Image Playground app lets you create images, emojis, or stickers directly from text prompts. You can adjust expressions, add accessories, or choose styles like “ChatGPT-style.” The feature was criticized early on for being primitive, and it still feels lighthearted and creative rather than professional.

The results look fine for casual use, though they’re far from what the latest AI generators can produce. Still, for quick visuals or personalized emojis, it’s an entertaining tool to have built into macOS.

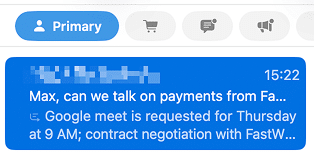

Summarization and faster replies in Mail

The Mail app uses Apple Intelligence to summarize long emails and suggest quick replies. It scans messages, highlights key information, and proposes one-tap answers. This works well for status updates or newsletters, where you only need a quick overview.

Here’s how to summarize emails in Mail once you’ve made sure Apple Intelligence is enabled:

- Open an email you want to have summarized.

- Locate the new Summarize button and click it.

- Review the summary.

I also noticed that in some cases, the email preview shows a short summary. That’s helpful, though not very predictable.

In my workflow, the summaries are helpful for speed but don’t always catch the nuance of personal emails. For routine correspondence, however, they’re a clear time-saver. I couldn’t test the quick reply functionality, though. Perhaps it only works in certain circumstances that I’m unaware of.

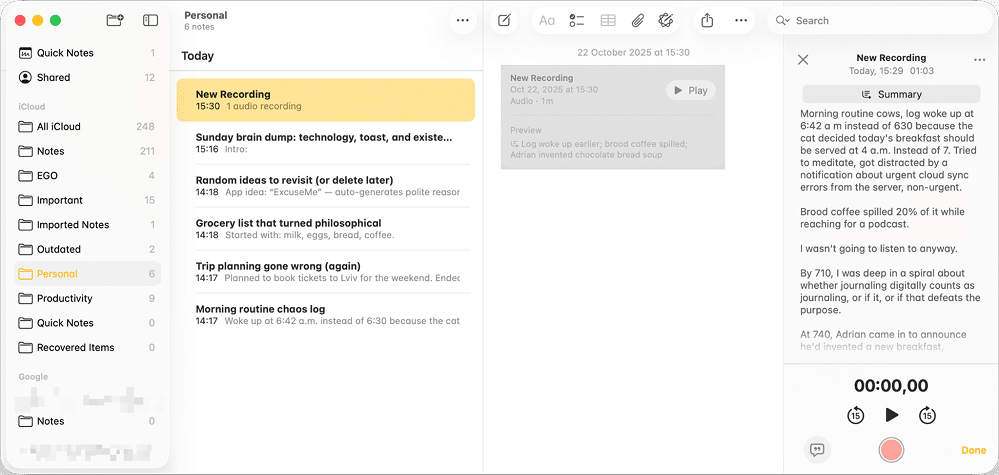

Voice memo transcribing in Notes

Apple Intelligence adds transcription and summarization to the Notes app. When you record a voice memo inside a note, the system automatically creates a text version and a short preview of what was said. This can be useful during offline meetings or brainstorming sessions.

To use them:

- Create a voice recording in the Notes app.

- You can now double-click it to see the automatic transcription of the text and the Summary button.

- In the transcription pane, you can double-click any word to jump to that part of the audio recording and listen to it.

- The recording will have a short summary in its Preview, and you can also use Summarize via the right-click menu to try and get a full summary.

I had high expectations for this feature, but ran into issues. The transcription contains multiple mistakes and is nowhere near as good as the one produced by OpenAI’s Whisper, and summarization sometimes failed, returning an error message instead of text.

Still, when it works, it’s a practical upgrade that can turn Notes from a digital notebook into a knowledge hub.

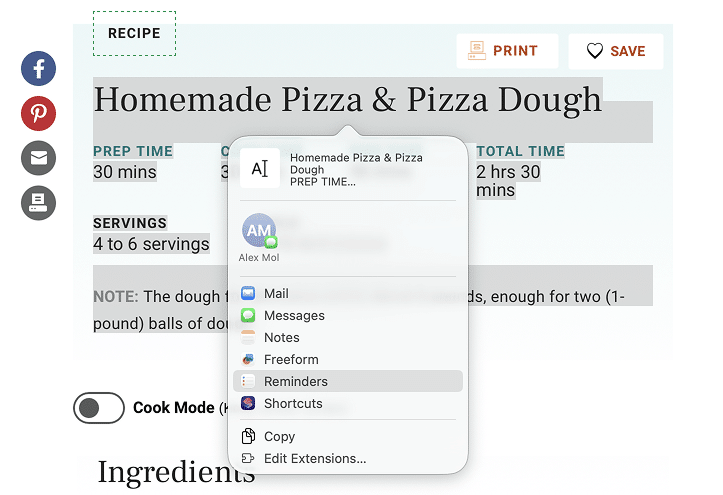

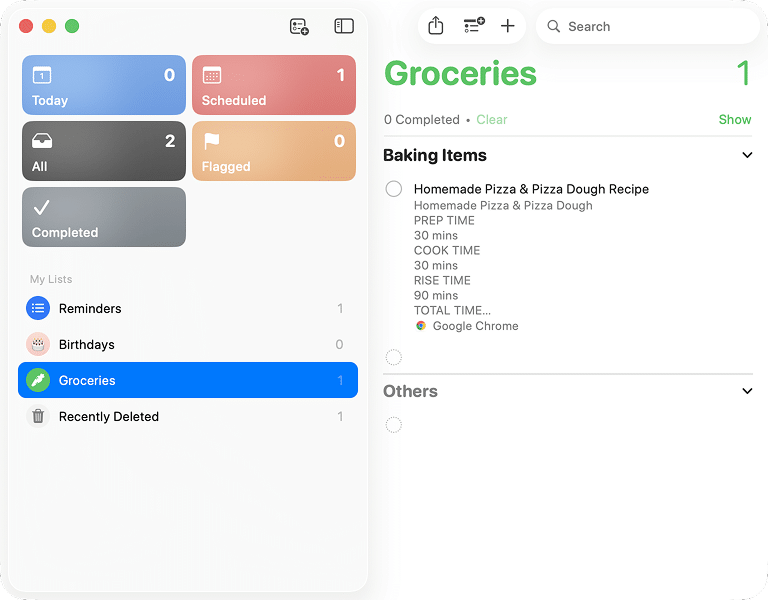

Reminders let you create tasks from text

Apple Intelligence now recognizes potential reminders in your text. Highlight a sentence or paragraph on a website, choose Share → Reminders, and macOS turns it into a reminder with a link and even suggests a relevant group.

It’s a smart concept, but it takes some time to discover how to make the most of it.

Once you get used to it, though, it becomes a subtle productivity boost.

Summaries (again!) in Safari

Safari gains AI-powered webpage summaries and integrates Writing Tools for quick text edits. You can open a long article in Reader mode and ask for a summary to see what it’s about before reading in detail.

I personally prefer scanning articles manually to extract the information I need, but I can see the appeal for users who process large amounts of reading material daily. It’s another small but practical upgrade that fits neatly into Apple’s ecosystem without the need for a third-party AI-first browser like Atlas or Comet.

Under the hood of Apple Intelligence

While exploring these features and reading Apple’s documentation, I began to see how Apple Intelligence actually fits into macOS.

You might have noticed that some AI features look familiar across different apps. The same writing tools that help you rewrite text in Mail also appear in Notes, Safari, and even some third-party apps. That’s because they all rely on what Apple calls the Foundation Models Framework, a shared system that lets both Apple and outside developers use the same on-device AI models for tasks like summarizing, rewriting, or proofreading. It’s Apple’s way of turning intelligence into a built-in macOS layer rather than an optional upgrade.

Some independent developers have already started experimenting with it. For example, the journaling app Day One now includes Apple’s writing tools directly inside its editor. Clearly, not everyone is on board yet, but Apple seems committed to making this framework the future default for how apps interact with its AI system.

When your Mac needs more power than its local model can handle, it temporarily reaches out to Apple’s Private Cloud Compute. It’s like a cloud extension designed for privacy rather than data collection. Every request is processed on secure servers with hardware-level encryption and transparency logs, so Apple can’t see what’s being analyzed.

From a technical point of view, the system is surprisingly elegant. The models are small enough to fit in memory, and macOS splits the load between the CPU, GPU, and Neural Engine to deliver responses in real time. You can actually see this when Apple Intelligence starts typing out a summary or suggestion before the full text is ready.

Closing Note

After spending some time with Apple Intelligence, I’m left with mixed impressions. It’s ambitious, thoughtful, and built on a fascinating technical foundation, yet it still feels more like a work in progress than a finished product. Some tools, such as Notes or Reminders, don’t always behave predictably, and many features are hidden behind layers of menus. Others, like Spotlight and Shortcuts, are genuinely useful but still fall short of what seasoned users can achieve with third-party apps and bleeding-edge AI models.

What Apple is doing, though, is laying down the groundwork for something bigger. By building a shared framework and running models directly on the device, it’s creating a future where AI quietly blends into macOS rather than sitting on top of it. With time, and as developers start to embrace the Foundation Models Framework, Apple Intelligence could evolve into a background companion that enhances the way macOS works and makes our everyday tools smarter from the get-go.